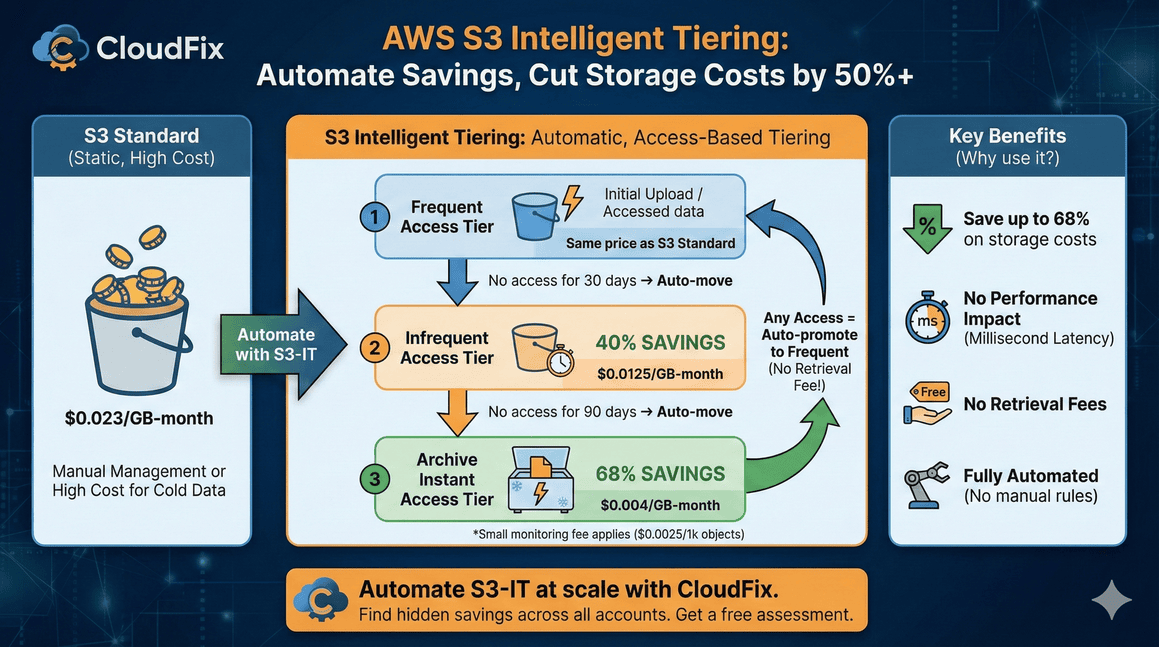

S3 Intelligent Tiering automatically moves your data between storage tiers based on access patterns, saving up to 68% with no performance impact. Here's how to set it up.

S3 Intelligent Tiering: Cut Your AWS Storage Costs by 50% (2026 Guide)

S3 Intelligent Tiering can cut your AWS storage bill in half. It monitors how often you access each object and automatically moves data to cheaper storage tiers when access drops off. No retrieval fees. No performance hit. No manual lifecycle rules to manage.

Since AWS launched Intelligent Tiering in 2018, customers have saved over $6 billion compared to S3 Standard storage. That’s not marketing fluff; it’s the accumulated savings across millions of buckets. We’ve seen it ourselves at CloudFix, both at our sister companies and at our customers.

This guide covers how S3 Intelligent Tiering actually works, when to use it, the real cost savings you can expect, and how to enable it across your accounts.

Quick Reference

| Item | Details |
|---|---|
| Savings | 40% after 30 days, 68% after 90 days (automatic) |
| Retrieval fees | None (instant access tiers) |
| Monitoring cost | $0.0025 per 1,000 objects/month |
| Latency | Same as S3 Standard (milliseconds) |
| Enable | --storage-class INTELLIGENT_TIERING |

What is S3 Intelligent Tiering?

S3 Intelligent Tiering is a storage class that automatically shifts your objects between access tiers based on usage patterns. You don’t need to predict how often data will be accessed or write lifecycle rules. AWS handles the transitions for you.

Here’s the basic flow:

- Objects start in the Frequent Access tier (same price as S3 Standard)

- After 30 days without access, they move to Infrequent Access (40% cheaper)

- After 90 days without access, they move to Archive Instant Access (68% cheaper)

When you access an object in a lower tier, it automatically moves back to Frequent Access. No delays, no retrieval fees, no manual intervention.

The only cost is a small monitoring fee: $0.0025 per 1,000 objects per month. For most workloads, the savings far exceed this fee.
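The flow above can be sketched as a small function. This is only an illustration of the 30-day and 90-day rules described here, not how S3 implements tiering; the function name and constant are hypothetical, and the 128 KB cutoff reflects the small-object exemption covered in the pricing section.

```python
MIN_MONITORED_BYTES = 128 * 1024  # smaller objects are not monitored and stay in Frequent Access


def automatic_tier(days_since_last_access: int, size_bytes: int) -> str:
    """Return the automatic tier an object would sit in under the 30/90-day rules."""
    if size_bytes < MIN_MONITORED_BYTES:
        return "Frequent Access"
    if days_since_last_access >= 90:
        return "Archive Instant Access"
    if days_since_last_access >= 30:
        return "Infrequent Access"
    return "Frequent Access"


# Any access resets the clock, which is the same as days_since_last_access == 0.
for days in (0, 45, 120):
    print(days, automatic_tier(days, size_bytes=5 * 1024 * 1024))
```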

The Five Access Tiers

S3 Intelligent Tiering includes five tiers. Three are automatic; two are opt-in for archival workloads.

Automatic Tiers (No Configuration Needed)

| Tier | Moves After | Savings vs S3 Standard | Retrieval Time |
|---|---|---|---|
| Frequent Access | N/A (starting tier) | 0% | Milliseconds |
| Infrequent Access | 30 days | 40% | Milliseconds |
| Archive Instant Access | 90 days | 68% | Milliseconds |

All three automatic tiers have the same latency and throughput as S3 Standard. Your applications won’t notice any difference.

Optional Archive Tiers (Opt-In Required)

| Tier | Moves After | Savings vs S3 Standard | Retrieval Time |
|---|---|---|---|
| Archive Access | 90+ days (configurable) | ~84% | 3-5 hours |
| Deep Archive Access | 180+ days (configurable) | ~96% | Up to 12 hours |

These tiers use Glacier infrastructure. The savings are significant, but retrieval takes hours, not milliseconds. Only enable these if your application can handle asynchronous data access.

Our recommendation: Stick to the automatic tiers unless you have genuine archival data that won’t need quick access. The instant-access tiers deliver substantial savings without changing how your applications work.

S3 Intelligent Tiering Pricing (2026)

Current pricing for US East (N. Virginia). Check the AWS pricing page for your region.

Storage Costs

| Tier | Cost per GB/Month | Relative to S3 Standard |
|---|---|---|
| Frequent Access (first 50 TB) | $0.023 | 100% |
| Infrequent Access | $0.0125 | 54% |
| Archive Instant Access | $0.004 | 17% |
| Archive Access | $0.0036 | 16% |
| Deep Archive Access | $0.00099 | 4% |

Monitoring Fee

$0.0025 per 1,000 objects per month

Objects smaller than 128 KB are exempt from monitoring fees and stay in the Frequent Access tier.

No Retrieval Fees

Unlike S3 Glacier storage classes, Intelligent Tiering charges no retrieval fees for the automatic tiers. Access your data whenever you need it at no extra cost.

Real-World Savings Calculation

What does this look like in practice? Based on data from thousands of S3 buckets, here’s a typical distribution once objects settle into their access patterns:

| Tier | Typical Distribution | Per-GB Cost | Monitoring Cost |
|---|---|---|---|
| Frequent Access | 10% | $0.023 | $0.0025 per 1K objects |
| Infrequent Access | 20% | $0.0125 | $0.0025 per 1K objects |
| Archive Instant Access | 70% | $0.004 | $0.0025 per 1K objects |

Let’s run the numbers for 1,000 objects at 1 GB each (1 TB total):

S3 Standard: 1,000 GB × $0.023 = $23.00/month

S3 Intelligent Tiering:

- Frequent Access: 100 GB × $0.023 = $2.30

- Infrequent Access: 200 GB × $0.0125 = $2.50

- Archive Instant: 700 GB × $0.004 = $2.80

- Monitoring: 1,000 objects × $0.0025/1K = $0.0025

- Total: $7.60/month

That’s a 67% reduction in storage costs for data with typical access patterns.

Your savings depend on your actual access patterns. If every object gets accessed within 30 days, you won’t save anything (and you’ll pay the monitoring fee). But that’s rare. Most S3 buckets contain significant amounts of cold data that never gets touched after initial upload.
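To try the same arithmetic with your own numbers, here is a minimal sketch using the US East prices listed above. The function and variable names are hypothetical, and the tier distribution is an input you would need to estimate for your own buckets.

```python
PRICE_PER_GB = {"frequent": 0.023, "infrequent": 0.0125, "archive_instant": 0.004}
MONITORING_PER_1K_OBJECTS = 0.0025


def monthly_cost(total_gb: float, object_count: int, distribution: dict) -> float:
    """Monthly Intelligent Tiering cost for a given share of data in each tier."""
    storage = sum(total_gb * share * PRICE_PER_GB[tier] for tier, share in distribution.items())
    monitoring = object_count / 1000 * MONITORING_PER_1K_OBJECTS
    return storage + monitoring


standard = 1000 * PRICE_PER_GB["frequent"]
typical = monthly_cost(1000, 1000, {"frequent": 0.10, "infrequent": 0.20, "archive_instant": 0.70})
all_hot = monthly_cost(1000, 1000, {"frequent": 1.0})

print(f"Intelligent Tiering: ${typical:.2f}/month")       # $7.60/month
print(f"Reduction vs Standard: {1 - typical / standard:.0%}")  # 67%
print(f"Extra cost if nothing cools down: ${all_hot - standard:.4f}/month")
```

The last line shows the worst case described above: when every object stays in Frequent Access, the only difference from S3 Standard is the monitoring fee.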

When to Use S3 Intelligent Tiering

Intelligent Tiering works well for:

- Data lakes and analytics: Query patterns are unpredictable

- User-generated content: Old uploads rarely accessed

- Application logs: Recent logs accessed frequently, older logs rarely

- Backup data: Accessed only during recovery

- Media archives: Some content stays popular, most doesn’t

- Any bucket where you don’t know access patterns: Let AWS figure it out

When NOT to Use S3 Intelligent Tiering

Skip Intelligent Tiering if:

- All data is accessed constantly: You’ll just pay monitoring fees with no savings

- Objects are mostly under 128 KB: Small objects exempt from monitoring, so no automatic tiering

- You have predictable access patterns: Manual lifecycle rules may be cheaper

- You need the lowest possible latency: S3 Express One Zone is faster (but more expensive)
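The monitoring-fee point can be made concrete with a rough break-even estimate: how large does an object need to be before the tier discount outweighs its per-object monitoring fee? This is a sketch built only from the US East prices quoted in this guide; the names are hypothetical, and treating 1 GB as 1024 × 1024 KB is an assumption about how storage is metered.

```python
STANDARD_PER_GB = 0.023
MONITORING_PER_OBJECT = 0.0025 / 1000  # monthly fee for a single monitored object
KB_PER_GB = 1024 * 1024  # assumption: binary gigabytes


def break_even_kb(tier_price_per_gb: float) -> float:
    """Object size (KB) at which the monthly tier saving equals the monitoring fee."""
    saving_per_gb = STANDARD_PER_GB - tier_price_per_gb
    return MONITORING_PER_OBJECT / saving_per_gb * KB_PER_GB


print(f"Infrequent Access break-even: {break_even_kb(0.0125):.0f} KB")
print(f"Archive Instant Access break-even: {break_even_kb(0.004):.0f} KB")
```

Under these assumptions, objects only slightly above the 128 KB threshold save very little even when they go cold, which is why buckets dominated by small objects are a poor fit.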

How to Enable S3 Intelligent Tiering

You have four options: set the storage class on upload, migrate existing objects, use lifecycle rules, or enforce it with a bucket policy.

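Option 1: Set the Storage Class on New Uploads

When uploading objects, specify the storage class:

    aws s3 cp myfile.txt s3://mybucket/ --storage-class INTELLIGENT_TIERING

S3 has no bucket-level default storage class, so every uploader has to pass this flag. To catch objects uploaded without it, use a lifecycle rule (Option 3); to reject them outright, use a bucket policy (Option 4).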
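If your uploads come from application code rather than the CLI, the equivalent is the StorageClass parameter on the upload call. Below is a minimal sketch assuming the boto3 SDK is installed and credentials are configured; the helper names are hypothetical.

```python
def intelligent_tiering_put_args(bucket: str, key: str, body: bytes) -> dict:
    """Build put_object arguments that store the object in Intelligent Tiering."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "StorageClass": "INTELLIGENT_TIERING",
    }


def upload(bucket: str, key: str, body: bytes) -> None:
    """Upload one object; needs boto3 and AWS credentials at call time."""
    import boto3  # third-party, imported lazily so the helper above has no dependencies

    boto3.client("s3").put_object(**intelligent_tiering_put_args(bucket, key, body))


print(intelligent_tiering_put_args("mybucket", "myfile.txt", b"hello")["StorageClass"])
```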

Option 2: Migrate Existing Objects

Copy objects in place with the new storage class:

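    aws s3 cp s3://mybucket/ s3://mybucket/ --recursive --storage-class INTELLIGENT_TIERING

In a versioned bucket, this creates a new version of each object in Intelligent Tiering, and the previous versions stay in their original storage class until you expire them. In an unversioned bucket, each object is overwritten in place. Note: Every copy is billed as a request at the PUT rate. Data transfer within the same region is currently free.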
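A recursive copy touches every object, including ones that gain nothing from it. The sketch below, assuming boto3 and hypothetical helper names, copies only objects that are at least 128 KB and not already in Intelligent Tiering. It also skips the Glacier Flexible Retrieval and Deep Archive classes, since those objects would have to be restored before they can be copied.

```python
MIN_TIERED_BYTES = 128 * 1024
SKIP_CLASSES = {"INTELLIGENT_TIERING", "GLACIER", "DEEP_ARCHIVE"}


def should_migrate(obj: dict) -> bool:
    """Decide from a list_objects_v2 entry whether an in-place copy is worthwhile."""
    storage_class = obj.get("StorageClass", "STANDARD")
    return obj["Size"] >= MIN_TIERED_BYTES and storage_class not in SKIP_CLASSES


def migrate_bucket(bucket: str) -> int:
    """Copy qualifying current objects onto themselves with the new storage class."""
    import boto3  # third-party, imported lazily

    s3 = boto3.client("s3")
    migrated = 0
    for page in s3.get_paginator("list_objects_v2").paginate(Bucket=bucket):
        for obj in page.get("Contents", []):
            if should_migrate(obj):
                # The managed copy switches to multipart for large objects.
                s3.copy(
                    {"Bucket": bucket, "Key": obj["Key"]},
                    bucket,
                    obj["Key"],
                    ExtraArgs={"StorageClass": "INTELLIGENT_TIERING"},
                )
                migrated += 1
    return migrated
```

It is worth running something like this against a test bucket first and confirming that metadata and tags survive the copy for your largest objects.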

Option 3: Use S3 Lifecycle Rules

Create a lifecycle rule to transition objects to Intelligent Tiering:

    {
      "Rules": [
        {
          "ID": "MoveToIntelligentTiering",
          "Status": "Enabled",
          "Filter": {},
          "Transitions": [
            {
              "Days": 0,
              "StorageClass": "INTELLIGENT_TIERING"
            }
          ]
        }
      ]
    }

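With Days set to 0, objects become eligible for transition as soon as they’re created. S3 evaluates lifecycle rules asynchronously, roughly once a day, so objects land in Intelligent Tiering shortly after upload rather than at upload time. Because Days counts from each object’s creation date, the same rule also picks up objects that already exist in the bucket. Lifecycle transitions are billed per request, so check the S3 pricing page before applying this to buckets with very large object counts.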
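Applying a lifecycle configuration through the API replaces whatever rules the bucket already has, so a script should merge the new rule into the existing set instead of overwriting it. Here is a sketch of that approach, assuming boto3; the helper names are hypothetical, and the empty prefix filter is used here as a way to match every object.

```python
def intelligent_tiering_rule(prefix: str = "", days: int = 0) -> dict:
    """Build a lifecycle rule that transitions matching objects to Intelligent Tiering."""
    return {
        "ID": "MoveToIntelligentTiering",
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},  # empty prefix matches all objects
        "Transitions": [{"Days": days, "StorageClass": "INTELLIGENT_TIERING"}],
    }


def merge_rule(existing_rules: list, new_rule: dict) -> list:
    """Keep existing rules, replacing any rule that has the same ID as the new one."""
    kept = [rule for rule in existing_rules if rule.get("ID") != new_rule["ID"]]
    return kept + [new_rule]


def apply_rule(bucket: str, rule: dict) -> None:
    """Read the current rules, merge, and write the full configuration back."""
    import boto3  # third-party, imported lazily
    from botocore.exceptions import ClientError

    s3 = boto3.client("s3")
    try:
        existing = s3.get_bucket_lifecycle_configuration(Bucket=bucket)["Rules"]
    except ClientError as err:
        if err.response["Error"]["Code"] != "NoSuchLifecycleConfiguration":
            raise
        existing = []
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration={"Rules": merge_rule(existing, rule)},
    )
```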

Option 4: Enforce via Bucket Policy

Block uploads that don’t use Intelligent Tiering:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "RequireIntelligentTiering",
          "Effect": "Deny",
          "Principal": "*",
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::mybucket/*",
          "Condition": {
            "StringNotEquals": {
              "s3:x-amz-storage-class": "INTELLIGENT_TIERING"
            }
          }
        }
      ]
    }
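When you manage more than one bucket, it helps to generate this policy rather than hand-edit the ARN each time. The sketch below assumes boto3 and uses hypothetical helper names. Keep in mind that writing a bucket policy replaces the bucket's existing policy, so in practice you would add this statement to the current policy document instead of applying it on its own.

```python
import json


def require_intelligent_tiering_policy(bucket: str) -> str:
    """Return a policy document that denies uploads not using Intelligent Tiering."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "RequireIntelligentTiering",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Condition": {
                    "StringNotEquals": {"s3:x-amz-storage-class": "INTELLIGENT_TIERING"}
                },
            }
        ],
    }
    return json.dumps(policy, indent=2)


def apply_policy(bucket: str) -> None:
    """Overwrite the bucket policy; needs boto3 and AWS credentials at call time."""
    import boto3  # third-party, imported lazily

    boto3.client("s3").put_bucket_policy(
        Bucket=bucket, Policy=require_intelligent_tiering_policy(bucket)
    )


print(require_intelligent_tiering_policy("mybucket"))
```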

S3 Intelligent Tiering vs Lifecycle Rules

You might wonder: why not just use lifecycle rules to move data to cheaper storage classes?

Lifecycle rules work well when:

- You know exactly when data becomes cold

- Access patterns are predictable

- You’re comfortable with retrieval fees (for Glacier classes)

Intelligent Tiering works better when:

- Access patterns are unpredictable

- Some old data might need instant access

- You want zero retrieval fees

- You don’t want to manage lifecycle rule complexity

The key difference: lifecycle rules move data on a schedule you define. Intelligent Tiering moves data based on actual access. If a “cold” object suddenly gets accessed, lifecycle rules don’t know and the object stays in cheap storage with expensive retrieval. Intelligent Tiering automatically promotes it back to the fast tier.

Enabling Archive Tiers (Optional)

The Archive Access and Deep Archive Access tiers are disabled by default. To enable them:

    aws s3api put-bucket-intelligent-tiering-configuration \
      --bucket mybucket \
      --id "ArchiveConfig" \
      --intelligent-tiering-configuration '{
        "Id": "ArchiveConfig",
        "Status": "Enabled",
        "Tierings": [
          {
            "Days": 90,
            "AccessTier": "ARCHIVE_ACCESS"
          },
          {
            "Days": 180,
            "AccessTier": "DEEP_ARCHIVE_ACCESS"
          }
        ]
      }'

Warning: Objects in Archive Access take 3-5 hours to retrieve. Deep Archive takes up to 12 hours. Your application code must handle asynchronous retrieval via RestoreObject. Don’t enable these tiers unless you’ve built that capability.
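As a rough idea of what that capability looks like, here is a sketch that checks an object's state before reading it and starts a restore when needed. It assumes boto3 and relies on the ArchiveStatus and Restore fields returned by head_object; the function names and the retry-later convention (returning None) are hypothetical choices, not a prescribed pattern.

```python
ARCHIVE_TIERS = {"ARCHIVE_ACCESS", "DEEP_ARCHIVE_ACCESS"}


def next_step(head: dict) -> str:
    """Classify a head_object response as 'read', 'restore', or 'wait'."""
    if 'ongoing-request="true"' in head.get("Restore", ""):
        return "wait"  # a restore has already been requested and is still running
    if head.get("ArchiveStatus") in ARCHIVE_TIERS:
        return "restore"  # archived and no restore in flight yet
    return "read"


def fetch_or_restore(bucket: str, key: str):
    """Return the object's bytes, or None if the caller should retry later."""
    import boto3  # third-party, imported lazily

    s3 = boto3.client("s3")
    step = next_step(s3.head_object(Bucket=bucket, Key=key))
    if step == "read":
        return s3.get_object(Bucket=bucket, Key=key)["Body"].read()
    if step == "restore":
        # An empty request asks for a restore with default settings.
        s3.restore_object(Bucket=bucket, Key=key, RestoreRequest={})
    return None
```

A caller would typically queue the key and retry after a delay sized to the retrieval times above.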

Common Questions

Does Intelligent Tiering affect performance?

No. The Frequent, Infrequent, and Archive Instant Access tiers all deliver the same millisecond latency as S3 Standard. Your application won’t notice any difference.

What’s the monitoring fee?

$0.0025 per 1,000 objects per month. For 1 million objects, that’s $2.50/month. For most workloads, the storage savings exceed this fee within the first month.

Are there retrieval fees?

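No retrieval fees for the three automatic tiers. In the optional Archive Access and Deep Archive Access tiers, standard restores are also free of charge; AWS charges only for expedited retrievals when you need the data faster. Check the S3 pricing page for current rates.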

What about small objects?

Objects under 128 KB are exempt from monitoring fees and stay in the Frequent Access tier. Intelligent Tiering is most effective for larger objects.

Can I use Intelligent Tiering with S3 Versioning?

Yes. Each version is tiered independently based on its own access patterns.

How do I monitor tier distribution?

Use Amazon CloudWatch metrics or AWS Cost and Usage Reports to see how your data is distributed across tiers.
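For the CloudWatch route, S3 publishes a daily BucketSizeBytes metric with a separate StorageType dimension value for each Intelligent Tiering tier. The sketch below assumes boto3 and a CloudWatch client in the bucket's region; the helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

TIER_STORAGE_TYPES = {
    "Frequent Access": "IntelligentTieringFAStorage",
    "Infrequent Access": "IntelligentTieringIAStorage",
    "Archive Instant Access": "IntelligentTieringAIAStorage",
    "Archive Access": "IntelligentTieringAAStorage",
    "Deep Archive Access": "IntelligentTieringDAAStorage",
}


def tier_shares(bytes_by_tier: dict) -> dict:
    """Convert bytes per tier into each tier's fraction of the total."""
    total = sum(bytes_by_tier.values())
    if total == 0:
        return {tier: 0.0 for tier in bytes_by_tier}
    return {tier: size / total for tier, size in bytes_by_tier.items()}


def fetch_bytes_by_tier(bucket: str) -> dict:
    """Read the latest daily size datapoint for each tier from CloudWatch."""
    import boto3  # third-party, imported lazily

    cloudwatch = boto3.client("cloudwatch")
    end = datetime.now(timezone.utc)
    start = end - timedelta(days=2)  # storage metrics are reported once a day
    result = {}
    for tier, storage_type in TIER_STORAGE_TYPES.items():
        points = cloudwatch.get_metric_statistics(
            Namespace="AWS/S3",
            MetricName="BucketSizeBytes",
            Dimensions=[
                {"Name": "BucketName", "Value": bucket},
                {"Name": "StorageType", "Value": storage_type},
            ],
            StartTime=start,
            EndTime=end,
            Period=86400,
            Statistics=["Average"],
        )["Datapoints"]
        latest = max(points, key=lambda p: p["Timestamp"], default=None)
        result[tier] = latest["Average"] if latest else 0.0
    return result
```

Calling tier_shares(fetch_bytes_by_tier("mybucket")) gives a distribution you can compare against the typical 10/20/70 split shown earlier.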

Does Intelligent Tiering work with S3 Replication?

Yes. Replicated objects use the storage class you specify in the replication rule. You can replicate to Intelligent Tiering in the destination bucket.

The Challenge: Enabling at Scale

Enabling Intelligent Tiering on one bucket is straightforward. Doing it across hundreds of buckets and multiple AWS accounts, while ensuring new buckets use it by default, is where it gets complicated.

Most organizations we work with have:

- Dozens or hundreds of S3 buckets

- Multiple AWS accounts

- New buckets created constantly by different teams

- No visibility into which buckets are optimized

This is where automation pays off. Manual configuration works for a handful of buckets. At scale, you need continuous monitoring to catch new buckets and identify which existing buckets would benefit most from Intelligent Tiering.
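A first step toward that kind of monitoring is a simple audit that lists buckets with no enabled lifecycle transition to Intelligent Tiering. The sketch below assumes boto3 and covers only the account behind the current credentials; the helper names are hypothetical, and a bucket could still be covered by upload-time settings that this check does not see.

```python
def transitions_to_intelligent_tiering(rules: list) -> bool:
    """True if any enabled lifecycle rule transitions objects to Intelligent Tiering."""
    for rule in rules:
        if rule.get("Status") != "Enabled":
            continue
        for transition in rule.get("Transitions", []):
            if transition.get("StorageClass") == "INTELLIGENT_TIERING":
                return True
    return False


def buckets_missing_rule() -> list:
    """List bucket names in the current account that lack such a rule."""
    import boto3  # third-party, imported lazily
    from botocore.exceptions import ClientError

    s3 = boto3.client("s3")
    missing = []
    for bucket in s3.list_buckets()["Buckets"]:
        name = bucket["Name"]
        try:
            rules = s3.get_bucket_lifecycle_configuration(Bucket=name)["Rules"]
        except ClientError as err:
            if err.response["Error"]["Code"] != "NoSuchLifecycleConfiguration":
                raise
            rules = []  # no lifecycle configuration at all
        if not transitions_to_intelligent_tiering(rules):
            missing.append(name)
    return missing
```

Running this on a schedule in each account is one way to catch newly created buckets.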

How CloudFix Automates S3 Intelligent Tiering

CloudFix continuously monitors your AWS accounts for S3 optimization opportunities. Our Intelligent Tiering finder identifies buckets that aren’t using optimal storage classes and calculates your potential savings.

When CloudFix finds an opportunity, it generates a Change Manager request that you can review and approve. One click enables Intelligent Tiering across all identified buckets safely, with full audit trails via CloudTrail.

No scripts to write. No manual bucket-by-bucket configuration. No buckets slipping through the cracks.

Get a free savings assessment to see how much you’re currently overspending on S3 storage.

Related Resources:

- AWS Documentation: S3 Intelligent-Tiering

- AWS S3 Pricing Page

- CloudFix Support: Enable S3 Intelligent-Tiering

Ready to see your actual S3 savings?

CloudFix scans your buckets and shows exactly which ones should be on Intelligent Tiering — plus 30+ other optimizations. Free assessment, no commitment.